Should you use ChatGPT to write at work?

It depends.

Greetings, all.

A few weeks ago, I shared an article I wrote for the Boston Globe about what it might mean for education when students start using AI to draft their papers. Shortly after that, OpenAI released ChatGPT, which is easier to use and more effective than the earlier version of GPT-3 that I had been using.

Will I still have a reason to write this newsletter in a world where you can ask ChatGPT for writing hacks? I tested it to see if it can replace me:

Based on this answer, I don’t think I’m obsolete yet. Most of the advice isn’t bad—it’s just generic. I have a more nuanced view of when to use the passive voice than the chatbot. I don’t think it’s very helpful to tell someone to edit their writing or to be more concise without telling them how. My goal with Writing Hacks has been to try to explain how to write more effectively in an actionable and concrete way. Could the chatbot do that? I asked.

I remain underwhelmed. Again, some of the advice is fine—but it’s not much more concrete than the original advice. Some of it is just odd: “The cat chased the mouse” and “The cat had the mouse chased” are not useful examples of active and passive voice; in the second one, the cat seems to have outsourced the chasing, which is a very different—and much more intriguing—scenario. And I would not advise anyone to try to make every sentence contain 15-20 words. That’s arbitrary—and also not short. The previous sentence is 10 words. That last one is six. Sentences should be as long as you need them to be to do what you want them to do. (I’ll write a Hack about that in the new year!)

I don’t think AI will spell the end of human writing—at least not yet. But we’re going to be talking more and more about whether to use it and how to use it effectively. Before we turn to AI to write our emails, memos, and reports, we need to know what problems that would solve for us. Would generating a first draft using AI save time? In some cases, yes. Would it be useful to create templates using ChatGPT that you can then use for the emails you regularly send clients? Perhaps.

I asked ChatGPT to create an email template for me to respond to reader questions, and then I asked it to customize the template for a reader who finds me boring and unhelpful. Would I use the result? No. It doesn’t sound like me. Could someone use it? Yes. I often suggest creating templates for emails that are sent out regularly, and ChatGPT may be a useful way to do this. But you’ll need to be able to judge whether the chatbot versions are effective.

What about using ChatGPT for more important tasks? Could you use it to generate pros and cons for an important decision? Maybe. But even where ChatGPT seems like a good fit, I think it’s worth proceeding with caution and keeping in mind the writing problems AI can’t solve, including the following:

ChatGPT doesn’t know or understand your audience. You can tell it to write to a particular audience, but it doesn’t actually know them. (It’s not human!) You still need to decide what your audience needs to hear—and why. And you need to understand how to make decisions about your tone rather than relying on the chatbot to do it for you.

ChatGPT gets a lot of facts wrong. You need to know what you’re talking about because it will very confidently offer up complete lies.

ChatGPT can’t tell you what you think. Writing, painful as it can be, helps us figure out what we think. I hear this from people across the professions. How do you know if you want to make that investment, choose that path, solve the problem in that way? Sometimes drafting the memo trying to explain your decision is how you figure out what decision you want to make.

Again, ChatGPT is not human! We need to talk to each other at work. AI is no substitute for collaboration and communication.

As the technology improves, we’ll figure out where it can and can’t be used effectively. But let’s start by asking what problems it can solve—and what problems it creates.

I wrote more about this topic for the Boston Globe earlier this week. I’m including the article below.

The questions we ought to be asking about ChatGPT

While we talk about the problems AI is going to create, let’s figure out which ones it can actually solve.

Since OpenAI began offering free access to its ChatGPT this month, I’ve come to dread seeing the now-familiar ChatGPT screenshots on social media. What has it been able to create now? Another student essay, but better? Yes. The essay assignment itself, followed by a student essay responding to that assignment, a rubric for grading the essay, and the graded version? Yes. A college admissions essay? Yep. Answers to math word problems? Uh huh. Step-by-step instructions for how to grow decorative gourds? I’m sure it can do that too.

It was hard not to enjoy this new toy, and I was among the many tweeting the results of my own experiments, including a pretty good pitch for a Hallmark Christmas movie about a writing teacher who fears she will lose her job to AI, complete with a tech bro love interest who tries to bring her around to the possibilities presented by the technology. It’s astonishing to see what the chatbot can produce. And while many have noted the uneven — and just plain wrong — nature of the academic work it produces, it will get better — fast.

And that’s where the dread comes in for me, the college writing instructor. Unlike my Hallmark movie counterpart, I’m not worried about my job (yet!); I’m concerned because if I were trying to solve the problem of how to help college students become better critical thinkers, and I had a choice between designing a writing-intensive course with thoughtful assignments and an engaged instructor or inventing an AI chatbot to draft and revise their essays, I wouldn’t choose the chatbot. And yet, the machine has arrived. And because it’s going to be so easy to generate drafts with ChatGPT, students are going to use it.

So what will we do? Does this spell the end of the take-home college writing assignment? A shift to oral exams and in-class, handwritten essays? Or will we now incorporate the technology into writing assignments, asking students to use AI as a kind of Grammarly-on-steroids to generate drafts and then react to or edit them? Perhaps those aren’t unreasonable solutions; after all, we’ve always given college students arguments to respond to in the form of assigned readings, lectures, peer review, and guest speakers. But it feels like asking students to incorporate ChatGPT is a solution to the problem created by the existence of the technology and not a solution to the many actual problems they face when learning to think and write.

Will it really be more valuable to react to a draft generated by a machine that has been trained on human ideas than to react to ideas presented by actual humans? What ideas will students miss if they only react to machine-generated drafts rather than creating their own? And if we are now going to be teaching students to edit rather than to write, will they still be able to figure out whether they disagree with ideas generated by AI? Will they care?

Maybe it’s fine to stop asking college students to write (I am not convinced!). But it would be better to make that decision because we decide writing doesn’t matter, or because we decide the college essay assignment is not helping students experience what does matter about writing, or because the machine offers a more effective way to help students think — not just because ChatGPT exists. Some writing assignments are busywork, and I’m all for reevaluating assignments that ask students to jump through hoops to prove they’ve done the reading or are otherwise poorly designed. But if ChatGPT makes it impossible to assign out-of-class writing to students, we won’t lose only the bad assignment. We’ll also lose the assignments that foster thinking, engage real audiences, and show students how to use their voices to argue for what matters to them and to connect with each other.

I have no doubt that letting a machine do the writing will be useful in contexts where it offers a clear solution to a problem. As the technology improves, we may be able to use AI effectively in the classroom to solve some of the problems faced by overburdened K-12 and college instructors. For example, software could offer early, basic feedback on student assignments and free up instructors to work on other issues with students. And we’ll be able to use AI to take on writing tasks we don’t value. I’d be thrilled, for example, if a machine could write up my doctor’s notes for him so that he wouldn’t have to divide his time between typing on his laptop and talking to me during an appointment. If it could interpret IKEA furniture assembly drawings and turn them into clear directions, I would pay to use it.

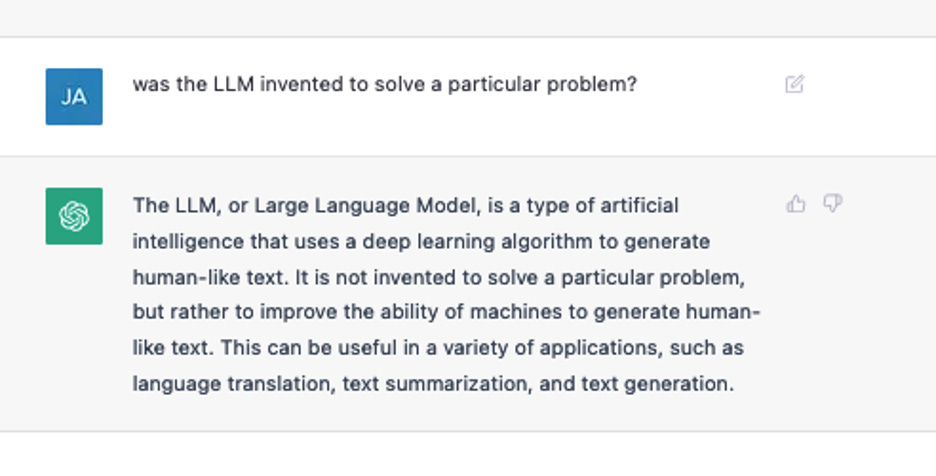

But before we remake the way we teach and learn to accommodate ChatGPT and its successors, we need to talk about what problems this technology can actually solve. If you ask ChatGPT what problems it was designed to solve, it will tell you that it was not, in fact, designed to solve any problems at all; it was designed with the goal of improving “the ability of machines to generate human-like text.” That goal is at odds with my own goal as a teacher, which is to help actual humans make sense of themselves and the world. What if the solution to that problem is not to integrate ChatGPT into the classroom, but instead to rethink how we assess our students and how we define academic success in a system that seems poised to incentivize relying on machine-generated writing in the first place?

I’d love to hear your thoughts on how you are using/plan to use ChatGPT at work.

Coming up in January: Situations where grammar checkers and AI can’t help you.

Happy Holidays!

Jane

![Can you create an email template for me to use to respond to readers questions about my newsletter? Sure! Here's a template that you can use to respond to readers' questions about your newsletter: Dear [reader], Thank you for your email and for your interest in my newsletter. I'm glad to hear that you have a question about it. Regarding your question about [specific topic], [provide a detailed response]. I hope this helps! If you have any further questions or would like to know more about my newsletter, please don't hesitate to reach out. Best, [Your name] Note: Make sure to customize the template with specific details and information that are relevant to your newsletter and the reader's question.](https://substackcdn.com/image/fetch/$s_!y16n!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F89e0c1f0-6708-40a7-9565-32a82ecdbf57_832x916.png)

![janerosenzweigwrites@gmail.com Now customize the template for a reader who is complaining that I am boring and not helpful. Sure! Here's a customized version of the template for a reader who is complaining that your newsletter is boring and not helpful: Dear [reader], Thank you for your email and for sharing your feedback about my newsletter. I'm sorry to hear that you find it boring and not helpful. I understand that everyone has different interests and preferences, and it's possible that my newsletter may not be the right fit for you. However, I want to assure you that I strive to provide valuable and informative content in each issue. If there are specific topics or types of information that you would like to see included in my newsletter, please let me know and I will do my best to accommodate your suggestions. Again, I apologize for any frustration that you may have experienced with my newsletter. I appreciate your feedback and will take it into consideration as I continue to improve and evolve the content that I offer. Best, [Your name]](https://substackcdn.com/image/fetch/$s_!8qJd!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fbfccaab7-97fa-488a-82cd-2c02739b07f6_880x1020.png)

my original writing ---rewrite professional:

I find value in running my DMs, comments in tickets, group messages, through ChatGPT. I think ChatGPT polishes my statements and softens my tone. I understand there are different reasons to write and my reasons are to convey my thoughts and hopefully add a perspective that someone hadn't thought about. Does ChatGPT make me a better writer? Am I using ChatGPT as a crutch? I am not sure, but I would draw similarity's between these questions and the questions asked about calculators when I was in junior high school. I use writing as a tool and as such will utilize anything that improves the performance of said tool.

I find it valuable to run my DMs, ticket comments, and group messages through ChatGPT. I think it helps polish my statements and soften my tone. I understand that there are various reasons for writing; my goal is to convey my thoughts clearly and, ideally, offer a perspective that others might not have considered. Does ChatGPT make me a better writer, or am I relying on it as a crutch? I’m not certain, but these questions remind me of the debates around calculators when I was in junior high. I view writing as a tool, and I’m willing to use anything that enhances the effectiveness of that tool. --ChatGPT enhanced